Key Trends in Technology Used to Increase Constituent Transparency in Law Enforcement

Today we will be discussing Key Trends in Technology Used to Increase Constituent TransparencyIn...

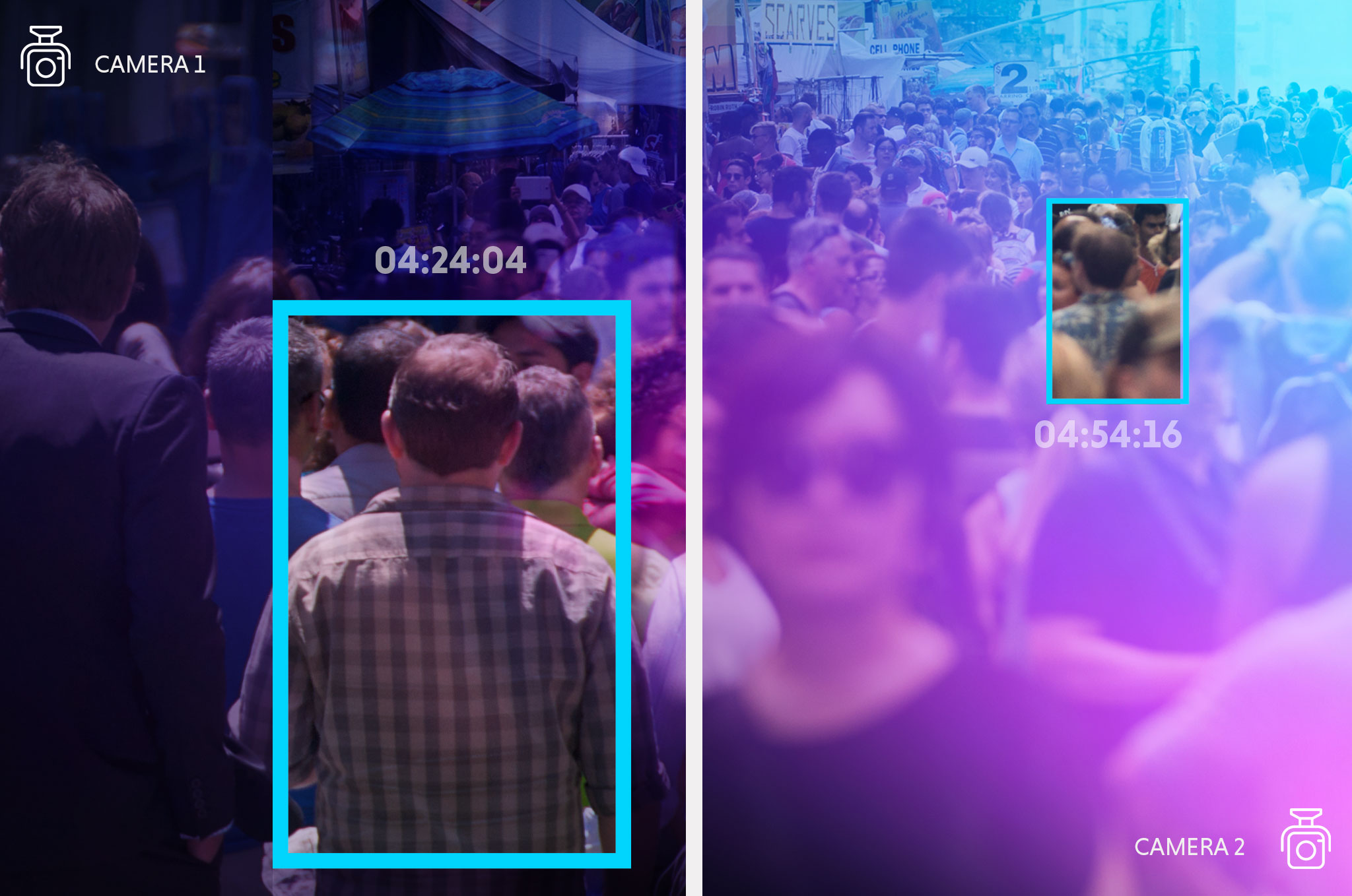

Create timelines and comprehensive stories with Tracker around a person or persons of interest faster to understand their activities and associations across separate videos.

Tracker is capable of uniquely identifying persons of interest without using PII, unlike other products that rely on facial recognition. This ensures your team remains in compliance with privacy laws.

Tracker is built on the aiWARE platform, which is used by law enforcement agencies and legal services partners across the country to help streamline investigations and eDiscovery while protecting PII.

Track a person related to criminal activity without using biometric data in public or private settings, such as public transportation stations, stadiums and arenas, public events, hospitals, and more.

Quickly find a missing person within the first 48 hours to increase chances of success, tracking movements across locations.

Follow human trafficking victims across different public or private settings to rescue them and apprehend the perpetrators.

Define a human as an object within a video file to create a profile the AI can use to surface similar-looking individuals across videos.

Process multiple videos from different sources at scale and surface moments where the person of interest you are seeking might appear.

Track a person of interest across a collection of videos from different sources, including bodyworn cameras, security footage, social media, and more.

Craft accurate timelines and stories faster regarding a person of interest’s activities and associations.

Leverage the technology to accelerate the apprehension of a suspect or return a missing person or trafficked person to safety.

Use AI ethically by remaining in compliance with privacy laws and protecting PII throughout the whole process.

Identify and define human-like objects within a frame to build the necessary criteria for AI to search against across a collection of video files.

Use AI models that aren’t biometric or facial recognition based to ensure proper oversight and protections when using AI technology in investigations.

Accelerate the analysis of video collections to extract valuable insights in building a case or when actively searching for suspects or missing persons.

Maintain compliance with privacy laws and ensure that personally identifiable information is protected throughout the entire process.

Veritone Tracker intellectual property listings: US Patent No. 10025854 GB Patent No. 2493580 US Patent No. 10282616 US Patent No. 9245247 GB Patent No. 2506172

LAW ENFORCEMENT

Transparency & Trust Report

What happens when you turn down the volume and really listen to Americans’ trust in, beliefs about, attitudes toward and expectations of police? We asked 3,000 Americans a wide-ranging set of questions about policing locally and nationally and found the results encouraging. The public is demanding more transparency than ever — and our perspective allows us to see that technology is finally mature enough to make this transparency not only possible but scalable.

Showcasing technology and stories of how Artificial Intelligence is changing the way businesses operate today and prepare for the future.

Today we will be discussing Key Trends in Technology Used to Increase Constituent TransparencyIn...